Amazon S3 rewrite rule limit

I’m in the process of moving this site to Amazon S3, rather than hosting it myself on a VPS. It should save me around $10 a month, but more importantly it means I won’t have to look after a server. Furthermore, I could take advantage of the new SSL support.

Unfortunately, over the years I’ve changed the structure of my site quite a lot, but I’ve tried my best to keep the old URIs working (everyone knows cool URIs don’t change). So much so that I have automated tests to ensure they keep working. So I need some way of setting up redirects.

This all seemed fine when I found I could configure redirects, so I started on some scripts to convert my Nginx redirect rules to the XML format used by S3.

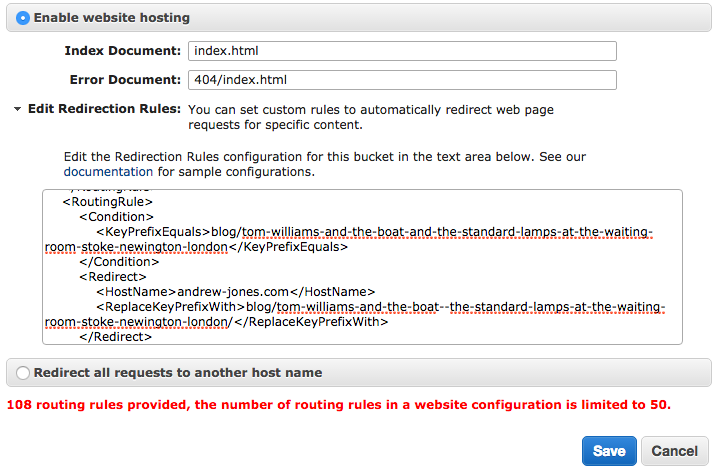
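
To give an idea of the kind of conversion involved, here is a minimal sketch in Python. It is not the actual script. It assumes the Nginx rules are all simple exact-path rewrites of the form `rewrite ^/old$ /new permanent;`, and the function name `nginx_to_s3_routing_rules` is made up for illustration. The element names (`RoutingRules`, `KeyPrefixEquals`, `ReplaceKeyWith`, `HttpRedirectCode`) are the ones S3 uses for website routing rules.

```python
import re
import xml.etree.ElementTree as ET

# Assumption: every rule is a simple exact-path rewrite, e.g.
#   rewrite ^/old/page$ /new/page permanent;
# Patterns containing regex metacharacters are not handled here.
REWRITE = re.compile(r"^\s*rewrite\s+\^/(\S+?)\$\s+/(\S+)\s+permanent;\s*$")


def nginx_to_s3_routing_rules(nginx_conf: str) -> str:
    """Convert simple Nginx permanent rewrites to S3 RoutingRules XML."""
    root = ET.Element("RoutingRules")
    for line in nginx_conf.splitlines():
        match = REWRITE.match(line)
        if match is None:
            continue  # skip anything that is not a simple permanent rewrite
        old_key, new_key = match.groups()  # S3 keys have no leading slash
        rule = ET.SubElement(root, "RoutingRule")
        condition = ET.SubElement(rule, "Condition")
        ET.SubElement(condition, "KeyPrefixEquals").text = old_key
        redirect = ET.SubElement(rule, "Redirect")
        ET.SubElement(redirect, "ReplaceKeyWith").text = new_key
        ET.SubElement(redirect, "HttpRedirectCode").text = "301"
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(nginx_to_s3_routing_rules("rewrite ^/old/page$ /new/page permanent;"))
```

One caveat with this approach: `KeyPrefixEquals` matches on a prefix rather than an exact path, so a rule for `old/page` would also catch `old/page2`. Each redirect also becomes one `RoutingRule` element, so the number of rules grows with the number of old URIs.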

But then when I tried to upload them, I found S3 has a hard limit of 50 redirect rules…

Not sure what to do now. Do I move to S3 and break many of my URIs? Or continue to host a static site on a VPS or similar?